- How Twin Talk retrieves and transforms PI System data.

- How to configure Databricks to receive industrial time-series data.

- How to establish secure connections and automate data pipelines.

- The benefits of using AI/ML in Databricks on industrial data.

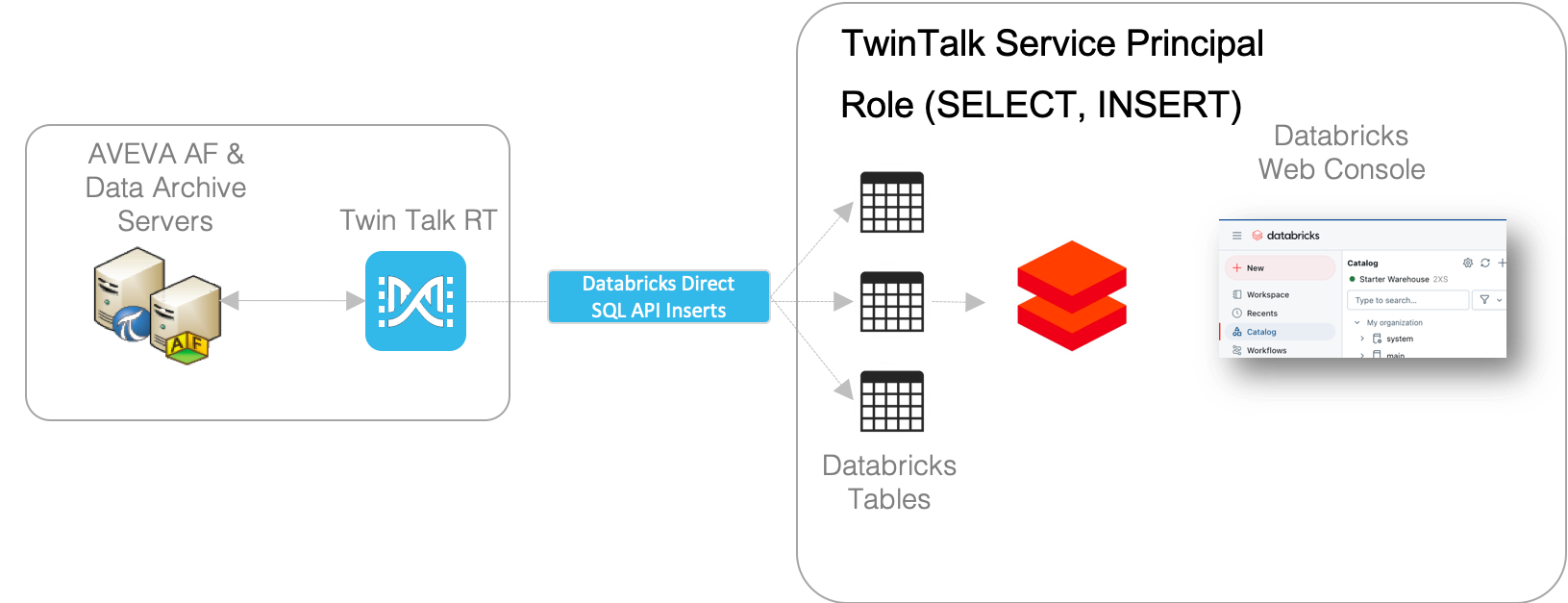

Understanding the Integration Architecture

AVEVA PI Systems Overview

AVEVA PI Systems store real-time and historical industrial data, typically structured as:

Twin Talk’s Role

Twin Talk acts as the middleware that connects AVEVA PI Systems with Databricks by:

Why Databricks?

Databricks provides:

Step-by-Step Guide: Moving Data to Databricks

Preparing Databricks for Twin Talk Ingestion

Before Twin Talk can send data, Databricks must be configured to receive it.

Step 1: Create a Databricks Service Principal

A Service Principal is required to authenticate Twin Talk with Databricks:

- Log in to the Databricks Account Console.

- Navigate to User Management > Service Principals.

- Click Add Service Principal, provide a name, and create it.

- Under “OAuth secrets”, click Generate Secret and store the generated secret securely.

Step 2: Assign Required Roles & Privileges

- Grant SELECT and INSERT privileges to the Twin Talk Service Principal.

- Assign roles within Unity Catalog for controlled access.

- Enable audit logs to track data access and usage.
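The grants in Step 2 can be sketched as a small helper that builds the SQL statements to run for the Service Principal. This is an illustration under stated assumptions, not Twin Talk's or Databricks' prescribed setup: the catalog, schema, and principal identifier are hypothetical, and it assumes Unity Catalog, where write access to a table is granted through the `MODIFY` privilege rather than a separate `INSERT` privilege.

```python
def build_grants(catalog: str, schema: str, table: str, principal: str) -> list[str]:
    """Return GRANT statements giving `principal` read/write access to one table."""
    # Principals are quoted with backticks in Databricks SQL.
    who = f"`{principal}`"
    return [
        # The principal must be able to reach the catalog and schema first.
        f"GRANT USE CATALOG ON CATALOG {catalog} TO {who};",
        f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO {who};",
        # Assumption: Unity Catalog, where inserts require MODIFY.
        f"GRANT SELECT, MODIFY ON TABLE {catalog}.{schema}.{table} TO {who};",
    ]


# Hypothetical names, for illustration only.
for statement in build_grants("main", "industrial", "realtime_historian", "twin-talk-sp"):
    print(statement)
```

Granting on the single landing table, rather than on the whole schema, keeps the Service Principal's access as narrow as the pipeline needs.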

Step 3: Define Databricks Tables & Schemas

The structure of the landing tables in Databricks should match the industrial data format:

```sql
CREATE TABLE realtime_historian (
  hierarchy_path STRING,
  measure_name STRING,
  measure_value DOUBLE,
  timestamp TIMESTAMP
);
```
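To make the landing-table schema concrete, the sketch below mirrors the four columns as a Python record and coerces a raw reading into those types. The `HistorianRow` and `parse_row` names, the sample values, and the choice to treat naive timestamps as UTC are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class HistorianRow:
    """One row of the realtime_historian landing table."""
    hierarchy_path: str    # STRING
    measure_name: str      # STRING
    measure_value: float   # DOUBLE
    timestamp: datetime    # TIMESTAMP


def parse_row(record: dict) -> HistorianRow:
    """Validate a raw record and coerce it to the landing-table types."""
    ts = datetime.fromisoformat(record["timestamp"])
    if ts.tzinfo is None:
        # Assumption: timestamps without an offset are already in UTC.
        ts = ts.replace(tzinfo=timezone.utc)
    return HistorianRow(
        hierarchy_path=str(record["hierarchy_path"]),
        measure_name=str(record["measure_name"]),
        measure_value=float(record["measure_value"]),  # raises ValueError on bad input
        timestamp=ts,
    )


row = parse_row({
    "hierarchy_path": "Plant/Line1/Pump3",
    "measure_name": "temperature",
    "measure_value": "71.5",
    "timestamp": "2025-01-01T00:00:00",
})
print(row)
```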

Configuring Twin Talk for Data Ingestion

Step 4: Connect Twin Talk to the AF Server

```xml
<add key="PiSystemName" value="<AF server IP or domain>" />
<add key="AfDataBaseName" value="<AF database name>" />
<add key="AfUserName" value="<AF service account username>" />
<add key="AfPassword" value="<encrypted password>" />
```
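Before starting Twin Talk, it can help to confirm that all four AF connection keys are present. The sketch below is one way to do that with Python's standard library. The `<configuration>/<appSettings>` wrapper and the sample values are assumptions (the article shows only the `<add>` entries), so adapt the parsing to the actual config file layout.

```python
import xml.etree.ElementTree as ET

REQUIRED_KEYS = ("PiSystemName", "AfDataBaseName", "AfUserName", "AfPassword")


def read_app_settings(xml_text: str) -> dict:
    """Collect key/value pairs from every <add> element in the config text."""
    root = ET.fromstring(xml_text.strip())
    return {el.get("key"): el.get("value") for el in root.iter("add")}


def missing_keys(settings: dict) -> list:
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not settings.get(key)]


# Hypothetical config content; AfPassword is deliberately left out.
SAMPLE = """
<configuration>
  <appSettings>
    <add key="PiSystemName" value="af.example.local" />
    <add key="AfDataBaseName" value="PlantModel" />
    <add key="AfUserName" value="svc_twintalk" />
  </appSettings>
</configuration>
"""

settings = read_app_settings(SAMPLE)
print(missing_keys(settings))  # prints ['AfPassword']
```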

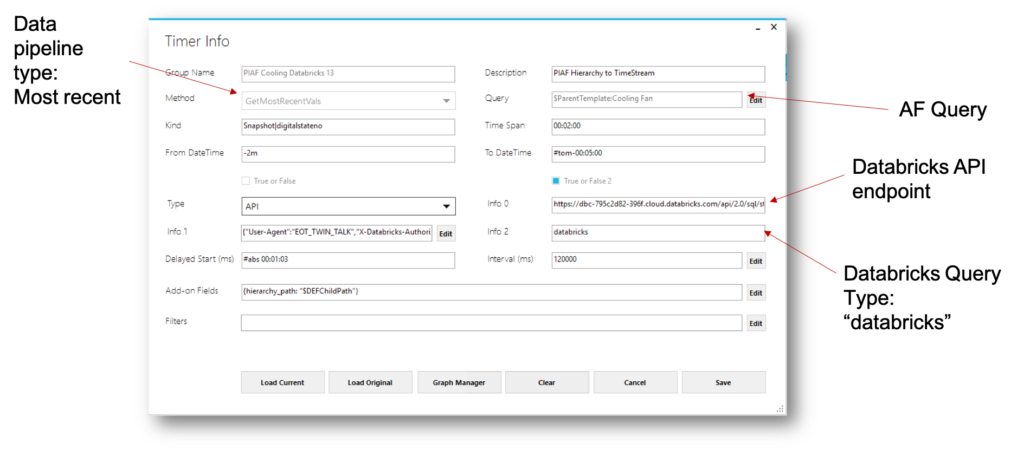

Step 5: Configure Twin Talk Queries

Tip: Queries are designed to select specific PI Points based on templates and metadata.

Tip: Data transformation can include pivoting, aggregation, and filtering.
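The three transformations named in the tip above (filtering, aggregation, pivoting) can be illustrated on a handful of long-format samples. This is a plain-Python sketch with made-up asset paths and values. It does not reproduce how Twin Talk implements its queries.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical samples: (hierarchy_path, measure_name, value, timestamp)
samples = [
    ("Plant/Pump1", "temperature", 70.0, "2025-01-01T00:00:00"),
    ("Plant/Pump1", "temperature", 74.0, "2025-01-01T00:30:00"),
    ("Plant/Pump1", "pressure", 2.1, "2025-01-01T00:00:00"),
    ("Plant/Pump2", "temperature", 65.0, "2025-01-01T00:00:00"),
    ("Plant/Pump2", "vibration", 0.4, "2025-01-01T00:00:00"),
]


def filter_measures(rows, wanted):
    """Filtering: keep only rows whose measure name is in `wanted`."""
    return [row for row in rows if row[1] in wanted]


def aggregate_mean(rows):
    """Aggregation: average value per (hierarchy_path, measure_name)."""
    groups = defaultdict(list)
    for path, name, value, _timestamp in rows:
        groups[(path, name)].append(value)
    return {key: mean(values) for key, values in groups.items()}


def pivot(aggregated):
    """Pivoting: one dict per asset, with measure names as columns."""
    wide = defaultdict(dict)
    for (path, name), value in aggregated.items():
        wide[path][name] = value
    return dict(wide)


kept = filter_measures(samples, {"temperature", "pressure"})
wide = pivot(aggregate_mean(kept))
print(wide)
```

Note that the landing table defined earlier is in long format (one row per measure), so a pivot like this would typically feed a separate wide table or a downstream view.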

Automating Data Transfer to Databricks

Step 6: Establish Secure API Communication

In the Twin Talk UI, set up the API parameters for Databricks:

```json
{
  "User-Agent": "EOT_TWIN_TALK",
  "X-Databricks-Authorization-Token-Type": "KEYPAIR_JWT",
  "Content-Type": "application/json",
  "tt_statement": "INSERT INTO realtime_historian VALUES $VALUES"
}
```

- Tip: These parameters ensure secure, encrypted data transmission.

- Tip: Twin Talk inserts data directly through the Databricks SQL API.
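To show what the `$VALUES` placeholder in `tt_statement` stands for, the sketch below expands a batch of rows into a multi-row `INSERT`. How Twin Talk performs this substitution internally is not documented here. The `sql_literal` and `render_insert` helpers are hypothetical and only illustrate the shape of the resulting statement.

```python
from datetime import datetime

TEMPLATE = "INSERT INTO realtime_historian VALUES $VALUES"


def sql_literal(value) -> str:
    """Render one Python value as a SQL literal."""
    if isinstance(value, datetime):
        return f"TIMESTAMP '{value.isoformat(sep=' ')}'"
    if isinstance(value, (int, float)):
        return repr(value)
    # Strings: double any embedded single quotes.
    return "'" + str(value).replace("'", "''") + "'"


def render_insert(rows, template: str = TEMPLATE) -> str:
    """Replace $VALUES with one parenthesised tuple per row."""
    tuples = ["(" + ", ".join(sql_literal(v) for v in row) + ")" for row in rows]
    return template.replace("$VALUES", ", ".join(tuples))


rows = [
    ("Plant/Pump1", "temperature", 71.5, datetime(2025, 1, 1, 0, 0, 0)),
    ("Plant/Pump1", "pressure", 2.1, datetime(2025, 1, 1, 0, 0, 0)),
]
print(render_insert(rows))
```

The column order in each tuple has to match the table definition (`hierarchy_path`, `measure_name`, `measure_value`, `timestamp`), because the statement does not name its columns.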

Step 7: Execute the Data Pipeline

Once Twin Talk is connected and configured:

The Insert SQL Creator in Twin Talk helps generate custom SQL statements for Databricks.

The AF Query Timer ensures continuous, scheduled ingestion of real-time industrial data.
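The idea behind timer-driven ingestion can be sketched as a fixed-interval loop. This is a generic illustration, not the AF Query Timer's actual behavior. The `run_on_interval` function and its parameters are hypothetical, and the clock and sleep functions are injectable so the loop can be exercised without waiting.

```python
import time


def run_on_interval(job, interval_s, max_runs, sleep=time.sleep, clock=time.monotonic):
    """Call `job()` every `interval_s` seconds, `max_runs` times."""
    next_run = clock()
    results = []
    for _ in range(max_runs):
        delay = next_run - clock()
        if delay > 0:
            sleep(delay)
        results.append(job())
        # Schedule from the planned time, not the finish time, to avoid drift.
        next_run += interval_s
    return results


# Example: run a stand-in ingestion job three times, one second apart.
if __name__ == "__main__":
    print(run_on_interval(lambda: "batch sent", interval_s=1.0, max_runs=3))
```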

Step 8: Validate and Monitor Data Flow

```sql
SELECT * FROM realtime_historian LIMIT 10;
```

Check audit logs to ensure successful ingestion:

```sql
SELECT * FROM system.access.audit WHERE user_agent LIKE '%EOT_TWIN_TALK%';
```
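Beyond eyeballing the query results, a simple freshness check can flag a stalled pipeline. The sketch below assumes the timestamps have already been fetched from `realtime_historian` by whatever client is in use. The `is_stale` helper and the five-minute threshold are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_stale(timestamps, now, max_lag=timedelta(minutes=5)) -> bool:
    """True when there are no rows or the newest row is older than `max_lag`."""
    if not timestamps:
        return True
    return now - max(timestamps) > max_lag


# Hypothetical timestamps read from the landing table.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
recent = [
    datetime(2025, 1, 1, 11, 57, tzinfo=timezone.utc),
    datetime(2025, 1, 1, 11, 58, tzinfo=timezone.utc),
]
print(is_stale(recent, now))
```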

Results: Unlocking the Power of Industrial Data in Databricks

Once your data is flowing into Databricks, you can:

- Analyze Operational Trends
  - Use SQL queries or Apache Spark for in-depth analysis.
  - Detect anomalies in sensor data.

- Apply AI/ML for Predictive Maintenance
  - Train ML models to predict equipment failures.
  - Optimize production schedules using Databricks AutoML.

- Integrate with Business Intelligence Tools
  - Use Power BI or Tableau for real-time dashboards.
  - Correlate industrial data with business KPIs.
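As a small example of the anomaly detection mentioned in the list above, the sketch below flags readings that sit far from the mean of a series. A global z-score is only a simple baseline, chosen here for brevity. The readings and the threshold of 2.0 are made up, and production models would usually account for trends and seasonality.

```python
from statistics import mean, stdev


def zscore_anomalies(values, threshold=3.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # constant series: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical temperature readings with one overheating spike at index 6.
readings = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2, 95.0, 70.1]
print(zscore_anomalies(readings, threshold=2.0))  # prints [6]
```

With short series a single outlier inflates the standard deviation, so a lower threshold (or a rolling window over a longer history) is needed for the spike to register.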

Conclusion

Moving time-series data from AVEVA PI Systems into Databricks using Twin Talk unlocks new possibilities for industrial analytics. By leveraging Databricks’ cloud-based AI and ML capabilities, you can optimize operations, reduce downtime, and gain real-time insights from your industrial data.

By following this guide, you have:

- Configured Databricks to receive industrial data.

- Set up Twin Talk for real-time data ingestion.

- Established a scalable data pipeline.

- Enabled AI-driven predictive analytics.

Now, you can start applying advanced data science and machine learning on your industrial data to drive operational excellence!

Ready to get started?

Implement your first data pipeline today and explore the power of AI!

Download a full breakdown of how to create data pipelines from the PI System to Databricks here:

Twin Talk Aveva – Databricks Data Pipelines
